Performance Characterization of Cooperative Localization and SLAM

-

The goal of this project is to provide theoretical tools for characterizing the accuracy of robot localization. In particular, our work focuses on three classes of localization problems:

1. Cooperative Localization (CL)

2. Simultaneous Localization and Mapping (SLAM)

3. Cooperative SLAM (C-SLAM)

Mobile robot teams have attracted the interest of the robotics community, because of the increased efficiency and reliability resulting when multiple robots cooperate for performing a task. In particular, localization (CL, SLAM, and C-SLAM) has been the most active research area of mobile robotics for the past two decades. This is due to the fact that it permits accurate pose estimation in unknown environments necessary for autonomy.

However, most of the research work on CL, SLAM, and C-SLAM has focused on implementation techniques and on addressing the problem of computational complexity. The issue of analytical evaluation of performance has been largely ignored in the literature, with only few exceptions of limited scope. As a result, questions such as "How much more accurate will the position estimates become if the odometry noise variance is reduced by 50%?", or "Will a particular set of sensors allow a robot to achieve localization errors smaller than 1m after 1h of operation?" can only be answered after extensive simulations and/or time-consuming experimentation. Clearly, this is a significant obstacle for efficient robot design. Our work aims at providing analytical means for answering such questions.

-

The primary contribution of this work is the derivation of theoretical tools for evaluating the performance, in terms of positioning accuracy, of CL, SLAM, and C-SLAM. In particular, we present analytical expressions that determine the guaranteed accuracy of localization, as a function of the following system-design parameters:

- the accuracy of the robots' sensors

- the number of robots and features

- the structure of Relative Position Measurement Graph (RPMG) that describes the measurement topology between the robots and/or landmarks

- the statistical properties of the robots' trajectories

- the spatial distribution of features, in SLAM and C-SLAM

-

Our specific goal is to study the properties of the state covariance matrix during pose estimation, since this matrix is a concrete measure of the uncertainty. The main challenge with this problem is that the models describing both the motion and the measurements of robots moving in 2D and 3D are generally nonlinear. As a result, the Extended Kalman Filter (EKF), rather than the linear Kalman filter, is applied for state estimation, and the Riccati recursion, which describes the time evolution of the covariance, is time-varying. For this Riccati no closed-form solution exists in the general case.

- the worst-case uncertainty of the position estimates, and

- the expected uncertainty of the position estimates

Therefore, in order to analytically study the properties of CL for robots moving in 2D, we resort to the derivation of upper bounds for the covariance of the position estimates. In particular, in our work we derive upper bounds on

In order to compute the upper bounds on the worst-case uncertainty, our approach is based on deriving a "bounding" LTI system, whose covariance at every time step is provably an upper bound of the covariance in the original, nonlinear system. This is achieved by determining upper bounds on the system noise covariance matrix and the relative position measurement covariance matrix. By obtaining the steady-state solution of the Riccati corresponding to this LTI system, we are able to predict the guaranteed asymptotic accuracy of localization. A similar approach, based on defining a different "bounding" LTI system, whose covariance is provably an upper bound to the expected uncertainty of the original nonlinear system, is followed for characterizing the expected performance of the system.

-

The performance of this algorithm has been demonstrated in both in real-world experiments, and in extensive simulation tests. We here describe the results of: (i) a large-scale experiment in an uncontrolled urban environment, and (ii) an experiment in a typical office environment.

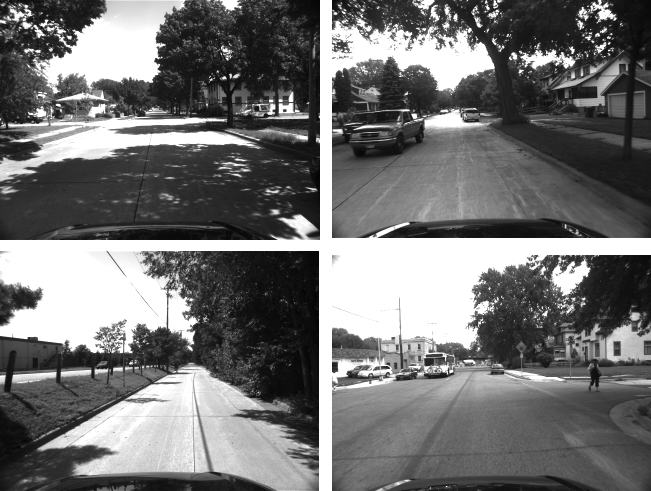

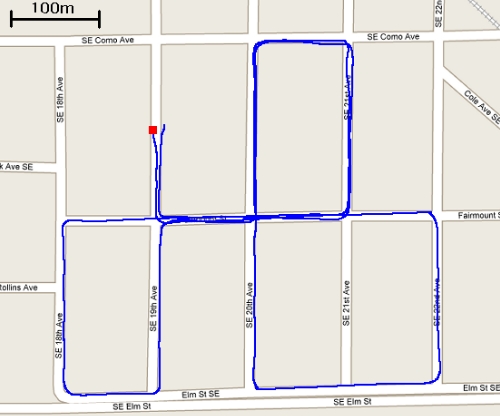

For the outdoor experiment an IMU/camera sensor payload was mounted on top of a car moving in a residential area of Minneapolis. The car traveled a total distance of approximately 3.2 km, over a time period of 9 min. The camera used for this experiment is an IEEE 1394 camera, recording images of resolution 640 x 480 pixels at a rate of 3 Hz, while the IMU is an Inertial Science ISIS IMU, recording inertial measurements at 100 Hz.

Some example images from the recorded dataset are displayed below:

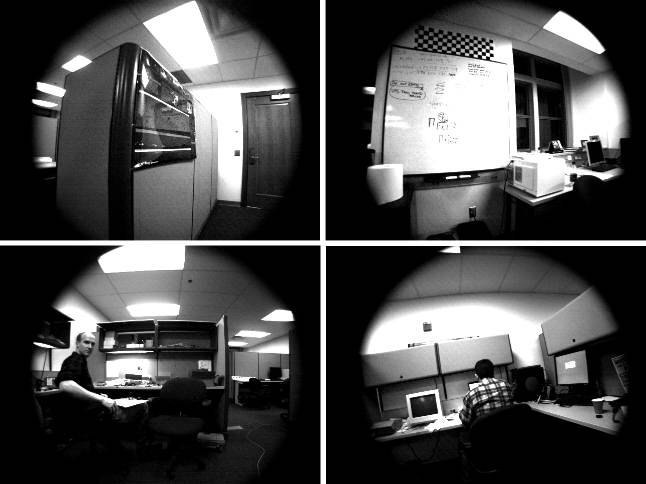

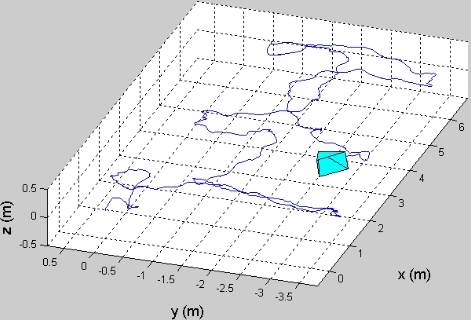

In addition to the above outdoor experiment, we have also tested the algorithm's performance in an indoors experiment. In this case, the IMU/camera system moves in a typical office setting (the main MARS lab office). Some example images from the sequence are the following:

>

- C1. A.I. Mourikis, S.I. Roumeliotis: "A Multi-state Constraint Kalman Filter for Vision-Aided Inertial Navigation," In Proceedings of the IEEE International Conference on Robotics and Automation, April 10-14 2007, Rome, Italy, pp. 3565-3572.

-

This work was supported by the University of Minnesota (DTC), the NASA Mars Technology Program (MTP-1263201), and the National Science Foundation (EIA-0324864, IIS-0643680).