Pin-point Landing based on Mapped Landmark Observations

- JPL/NASA


In this project, we have developed an Extended Kalman Filter (EKF)-based algorithm for estimating the pose and velocity of a spacecraft during Entry, Descent and Landing (EDL). The proposed estimator combines measurements of rotational velocity and linear acceleration from an Inertial Measurement Unit (IMU) with observations of a priori Mapped Landmarks (MLs), such as craters or other visual features, that exist on the surface of a planet. The tight coupling of inertial sensory information with visual cues results in accurate, robust state estimates that are available at a high bandwidth. The dimensions of the landing uncertainty ellipses achieved by the proposed algorithm are three orders of magnitude smaller than those possible when relying exclusively on IMU integration.
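To illustrate why landmark updates shrink the landing uncertainty so much, here is a minimal covariance-only sketch of a Kalman filter. It is not the estimator described above: it assumes a 1D state of position and velocity, white accelerometer noise, and a landmark observation simplified to a direct position measurement. All function names and noise values are hypothetical.

```python
import math


def propagate(P, dt, sigma_a):
    """Propagate the 2x2 covariance of [position, velocity] over one IMU step."""
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    # F P F^T with F = [[1, dt], [0, 1]]
    f00 = p00 + dt * (p01 + p10) + dt * dt * p11
    f01 = p01 + dt * p11
    f10 = p10 + dt * p11
    f11 = p11
    # Process noise from accelerometer noise: Q = sigma_a^2 * g g^T, g = [dt^2/2, dt]
    g0, g1 = 0.5 * dt * dt, dt
    q = sigma_a ** 2
    return [[f00 + q * g0 * g0, f01 + q * g0 * g1],
            [f10 + q * g1 * g0, f11 + q * g1 * g1]]


def update(P, sigma_z):
    """Kalman covariance update for a position-only measurement, H = [1, 0]."""
    s = P[0][0] + sigma_z ** 2              # innovation variance
    k0, k1 = P[0][0] / s, P[1][0] / s       # Kalman gain
    # (I - K H) P
    return [[P[0][0] - k0 * P[0][0], P[0][1] - k0 * P[0][1]],
            [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


def final_position_sigma(duration, dt, sigma_a, update_every=None, sigma_z=1.0):
    """Return the final position standard deviation after `duration` seconds.

    update_every: number of IMU steps between landmark updates,
                  or None for pure IMU integration (dead reckoning).
    """
    P = [[1.0, 0.0], [0.0, 0.01]]           # assumed initial covariance
    steps = int(round(duration / dt))
    for k in range(1, steps + 1):
        P = propagate(P, dt, sigma_a)
        if update_every and k % update_every == 0:
            P = update(P, sigma_z)
    return math.sqrt(P[0][0])


if __name__ == "__main__":
    dead_reckoning = final_position_sigma(60.0, 0.01, 0.05)
    aided = final_position_sigma(60.0, 0.01, 0.05, update_every=10, sigma_z=1.0)
    print("IMU only:", dead_reckoning, "with landmark updates:", aided)
```

Without updates, the position uncertainty grows without bound as velocity errors are integrated; with periodic updates it stays bounded. The actual improvement in the full 3D problem depends on the sensor characteristics and trajectory, so the numbers printed here are not comparable to the results reported on this page.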

Spacecraft 3D position and orientation, obtained by integration of IMU measurements, are updated using camera observations of ground features with known global coordinates. These coordinates are obtained from satellite orthoimagery combined with digital elevation maps. Feature extraction and matching can be done by a number of different approaches, e.g., using crater detection algorithms, SIFT keys, or 2D correlation between the map and the camera image. Techniques such as RANSAC, Mahalanobis distance gating, state augmentation, and iterated extended Kalman filter updates are used to address outlier rejection, image processing delays, and the nonlinear measurement model.
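As a rough illustration of two of the ingredients named above, the sketch below projects a mapped landmark into the image with a pinhole camera model and applies Mahalanobis distance gating to the resulting residual. The frame conventions, focal length, and innovation covariance `S` are assumptions made for this example, and the function names are hypothetical; the measurement model used in the actual filter may differ.

```python
# 99% chi-square threshold for a 2-DOF residual (image u, v)
CHI2_2DOF_99 = 9.21


def project(landmark_world, cam_pos_world, R_cam_world, focal_px):
    """Pinhole projection of a mapped landmark, in pixels from the principal point.

    R_cam_world rotates world-frame vectors into the camera frame
    (camera z-axis is assumed to be the optical axis).
    """
    d = [landmark_world[i] - cam_pos_world[i] for i in range(3)]
    pc = [sum(R_cam_world[r][c] * d[c] for c in range(3)) for r in range(3)]
    if pc[2] <= 0.0:
        raise ValueError("landmark is behind the camera")
    return (focal_px * pc[0] / pc[2], focal_px * pc[1] / pc[2])


def mahalanobis_sq(residual, S):
    """Squared Mahalanobis distance r^T S^-1 r for a 2D residual."""
    a, b, c, d = S[0][0], S[0][1], S[1][0], S[1][1]
    det = a * d - b * c
    r0, r1 = residual
    # Closed-form inverse of the 2x2 innovation covariance
    return (r0 * (d * r0 - b * r1) + r1 * (-c * r0 + a * r1)) / det


def passes_gate(measured_px, predicted_px, S, threshold=CHI2_2DOF_99):
    """Accept a landmark match only if its residual is statistically plausible."""
    residual = (measured_px[0] - predicted_px[0],
                measured_px[1] - predicted_px[1])
    return mahalanobis_sq(residual, S) <= threshold


if __name__ == "__main__":
    # Assumed setup: nadir-pointing camera 1000 m above a flat surface,
    # world z-axis up, camera z-axis pointing down.
    R_down = [[1.0, 0.0, 0.0],
              [0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0]]
    predicted = project((10.0, 0.0, 0.0), (0.0, 0.0, 1000.0), R_down, 1000.0)
    S = [[4.0, 0.0], [0.0, 4.0]]  # assumed innovation covariance, in px^2
    print(passes_gate((11.0, 1.0), predicted, S))   # small residual
    print(passes_gate((30.0, 0.0), predicted, S))   # gross mismatch
```

In a full filter, `S` would be computed as H P Hᵀ + R from the current state covariance, so the gate tightens automatically as the estimate improves. Matches that fail the gate are discarded before the EKF update.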

Experimental Results: The algorithm has been successfully tested on actual NASA datasets, e.g., those used to validate the Descent Image Motion Estimation System (DIMES) for the Mars Exploration Rover (MER) missions, and one acquired during the Mars Science Laboratory (MSL) subsonic parachute drop test. On both datasets, our algorithm achieved final position and orientation uncertainties of less than 3 m and 0.5 deg, respectively.


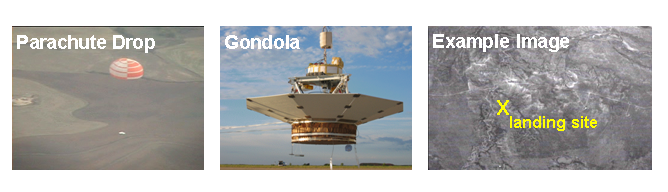

Pin-Point Landing Experiment on the NASA MSL Subsonic Parachute Drop Test (PDT). The gondola (top center) was attached to a balloon that carried it to approximately 36 km altitude and then released it for descent on a parachute (top left). During the descent, a nadir-pointing camera recorded images of the landing site (top right), and an IMU provided measurements of acceleration and rotational velocity.

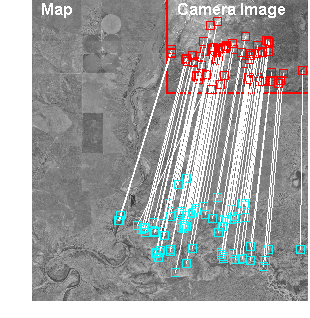

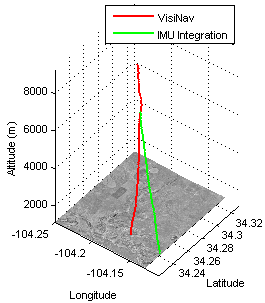

Examples of matched SIFT features from this experiment are shown below (left), between an aerial image from the Parachute Drop Test and a map of the landing area, given in the form of an 11x12 km patch of grayscale orthoimagery. The image was taken at an altitude of approximately 3.5 km above ground. The trajectory of the parachute is shown on the right, with the green line based on pure IMU integration (dead-reckoning), and the red line showing the estimate of our proposed filter.


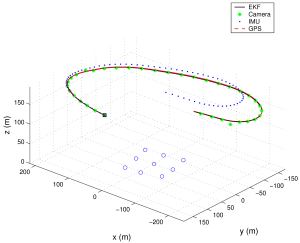

Experimental setup and payload used for the DIMES field testing (left). The helicopter flew over several test sites, recording images of artificial, GPS-surveyed targets (right). A sample image recorded by the camera is shown below, with the detected targets denoted by black squares.


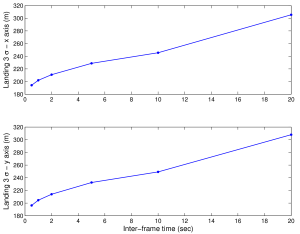

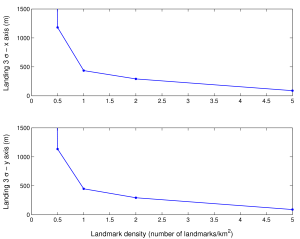

An additional set of simulation results characterizes the algorithm's dependence on design parameters, such as image frame rate (bottom left), ML density (bottom right), or detection accuracy. Taken together, these results give space mission designers a tool for assessing how such design choices affect landing accuracy.

- C1. N. Trawny, A. I. Mourikis, S. Roumeliotis, A. E. Johnson, and J. Montgomery. Vision-aided inertial navigation for pin-point landing using observations of mapped landmarks. Journal of Field Robotics, Special Issue on Space Robotics, vol. 24, no. 5, pp. 357-378, 17 Apr. 2007.


This work was supported by the University of Minnesota (DTC), the NASA Mars Technology Program (MTP-1263201), and the National Science Foundation (EIA-0324864, IIS-0643680).