- Summary

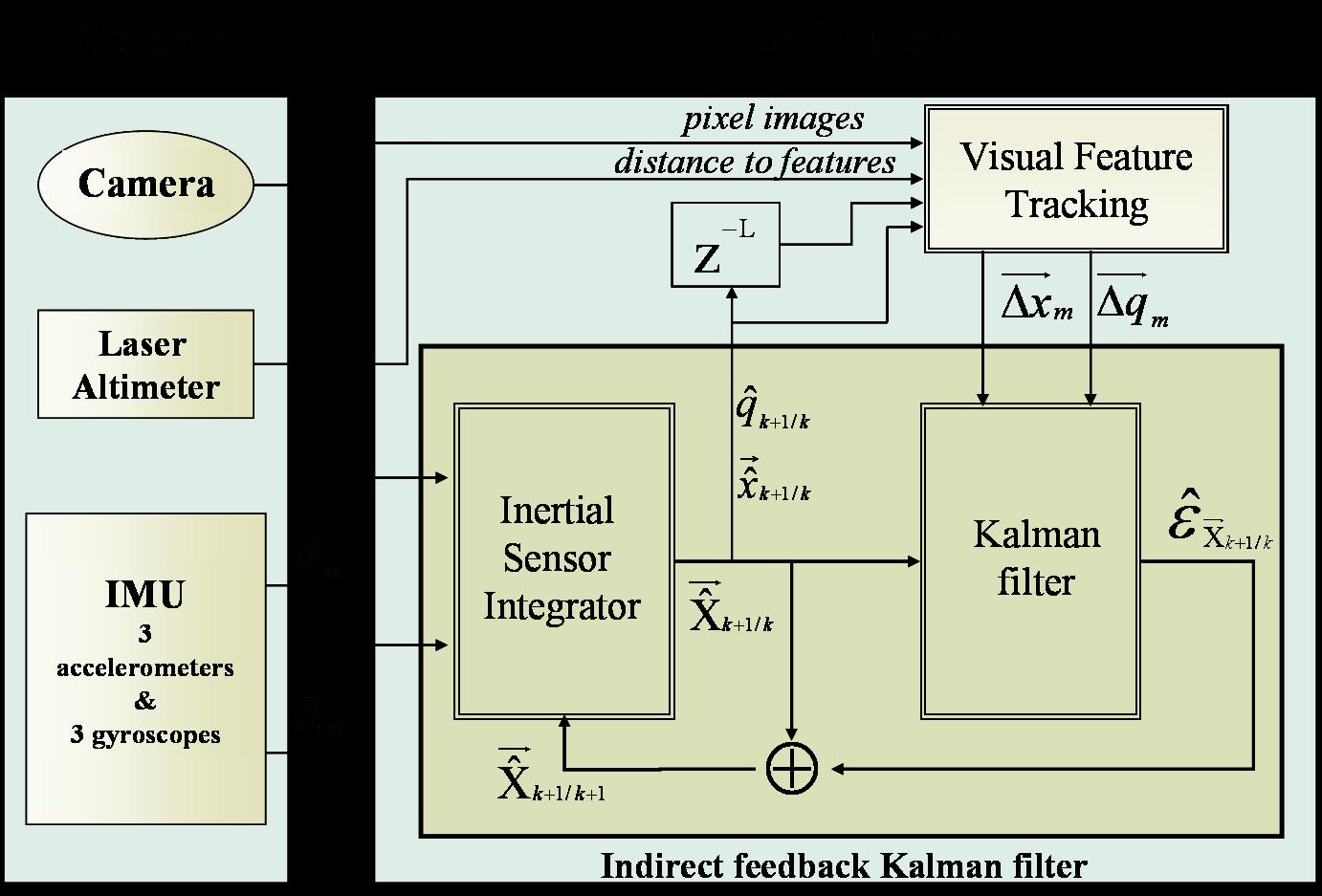

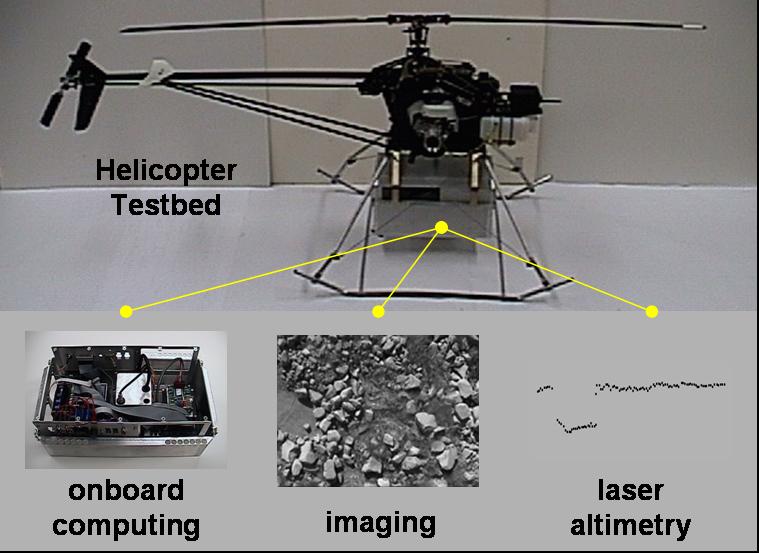

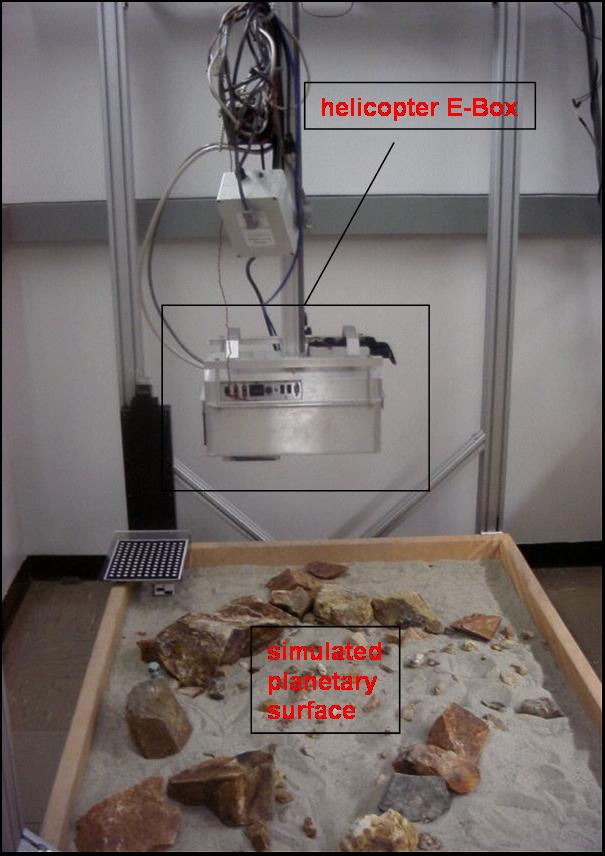

Inertial Navigation Systems (INS) that integrate Inertial Measurement Unit (IMU) signals with periodic updates from the Global Positioning System (GPS) have been applied successfully over the past decades to position tracking for both land and aerial vehicles. Where GPS signals are unavailable (e.g., on the surface of Mars or indoors) or erroneous because of multipath effects (e.g., near tall structures), alternative sensors are required to correct the errors (drift) that accumulate in the localization estimates. Cameras are light, small, and passive (and thus energy-efficient) sensors that have been used successfully to estimate the motion of a vehicle by tracking features across consecutive images.

Recent research on vision-based motion estimation has demonstrated that the linear and angular displacement of a vehicle can be estimated with a single camera. Although these techniques are fairly accurate over short motions, they suffer from several limitations: (i) the inability to distinguish between small rotations and small translations, (ii) loss of track during sudden motions, (iii) scale ambiguity when the distance to the tracked features is unknown, and (iv) increased computational requirements when no initial estimate of the motion is available.

We address these issues with an enhanced Kalman filter-based estimation scheme that combines navigation information from the inertial sensors (IMU) with visual feature-tracking information from a single camera. Velocity and pose estimates from the inertial sensors are used to resolve the small rotation/translation ambiguity in the motion estimates and to provide an accurate initial estimate for the feature-tracking algorithm, which significantly reduces the time the feature-tracking algorithm needs to converge.

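
The IMU-provided initial estimate can be illustrated by predicting where a tracked feature should reappear after the IMU-estimated camera motion, so that the tracker only needs to search a small window around that prediction. This is a minimal sketch under stated assumptions, not the method used in the project: it assumes an ideal pinhole camera without lens distortion, a known depth for each feature (for example from an altimeter over roughly flat terrain), and a rotation vector and translation already integrated from the IMU. The function names and intrinsic values are hypothetical.

```python
import math


def rodrigues(rvec):
    """Rotation matrix from an axis-angle vector (radians), via Rodrigues' formula."""
    theta = math.sqrt(sum(x * x for x in rvec))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (x / theta for x in rvec)
    c, s = math.cos(theta), math.sin(theta)
    C = 1.0 - c
    return [
        [c + kx * kx * C, kx * ky * C - kz * s, kx * kz * C + ky * s],
        [ky * kx * C + kz * s, c + ky * ky * C, ky * kz * C - kx * s],
        [kz * kx * C - ky * s, kz * ky * C + kx * s, c + kz * kz * C],
    ]


def predict_feature(u, v, depth, R, t, fx, fy, cx, cy):
    """Predict where pixel (u, v) reappears after the camera moves by (R, t).

    (R, t) is the pose of the new camera frame expressed in the old one, so a
    point P1 in the old frame has coordinates P2 = R^T (P1 - t) in the new one.
    Returns None if the point ends up behind the new camera.
    """
    # Back-project the pixel using the assumed depth along the optical axis
    P1 = [(u - cx) * depth / fx, (v - cy) * depth / fy, depth]
    d = [P1[i] - t[i] for i in range(3)]
    # P2[j] = sum_i R[i][j] * d[i], i.e. R transpose times d
    P2 = [sum(R[i][j] * d[i] for i in range(3)) for j in range(3)]
    if P2[2] <= 0.0:
        return None
    # Re-project with the same (hypothetical) intrinsics
    return fx * P2[0] / P2[2] + cx, fy * P2[1] / P2[2] + cy


# Hypothetical intrinsics and IMU-integrated motion: a 5 m descent, no rotation
R = rodrigues([0.0, 0.0, 0.0])
print(predict_feature(420.0, 240.0, 10.0, R, [0.0, 0.0, 5.0], 500.0, 500.0, 320.0, 240.0))
# -> (520.0, 240.0)
```

For a camera 10 m above the ground that descends 5 m along its optical axis, a feature 100 px to the right of the principal point is predicted to move to 200 px to the right of it, because its depth halves. Without such a prior, the tracker would have to search a much larger region of the image.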
In addition, when the field of view changes drastically or when too few features are available to estimate displacements reliably, the enhanced estimator continues to track the motion of the vehicle using only the IMU signals. Finally, because the filter is augmented with visually extracted motion measurements, the short-term biases of the inertial sensors are estimated on-line, which reduces the rate at which positioning error accumulates. The filter is used to track the position and orientation of an aerial vehicle (autonomous helicopter) while it descends toward and/or hovers above a potential landing site.
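
The two behaviours described above, IMU-only propagation when vision is unavailable and on-line estimation of an inertial sensor bias from visual motion measurements, can be illustrated with a small Kalman filter. This is a deliberate simplification and not the filter from the paper: it assumes a single axis, a state of position, velocity, and a constant accelerometer bias, and a vision front end that reports a noisy velocity (an image-based displacement divided by the frame interval). All class names, rates, and noise values are hypothetical.

```python
import math
import random


def mat_mul(A, B):
    """Plain-Python matrix product (matrices are lists of rows)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


class BiasKalman1D:
    """Hypothetical 1-D filter with state x = [position, velocity, accel_bias]."""

    def __init__(self, q_acc=0.05 ** 2, q_bias=1e-6, r_vel=0.05 ** 2):
        self.x = [0.0, 0.0, 0.0]
        self.P = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
        self.q_acc = q_acc    # accelerometer white-noise variance per sample
        self.q_bias = q_bias  # bias random-walk variance per second
        self.r_vel = r_vel    # variance of the vision-derived velocity

    def propagate(self, a_meas, dt):
        """IMU-only step: integrate the bias-compensated acceleration."""
        p, v, b = self.x
        a = a_meas - b
        self.x = [p + v * dt + 0.5 * a * dt * dt, v + a * dt, b]
        F = [[1.0, dt, -0.5 * dt * dt], [0.0, 1.0, -dt], [0.0, 0.0, 1.0]]
        G = [[0.5 * dt * dt], [dt], [0.0]]  # how accelerometer noise enters p and v
        Q = [[g * self.q_acc for g in row] for row in mat_mul(G, transpose(G))]
        Q[2][2] += self.q_bias * dt
        FPFt = mat_mul(mat_mul(F, self.P), transpose(F))
        self.P = [[FPFt[i][j] + Q[i][j] for j in range(3)] for i in range(3)]

    def update_velocity(self, v_meas):
        """Vision update with measurement model z = v + noise, i.e. H = [0, 1, 0]."""
        S = self.P[1][1] + self.r_vel
        K = [self.P[i][1] / S for i in range(3)]
        innovation = v_meas - self.x[1]
        self.x = [self.x[i] + K[i] * innovation for i in range(3)]
        # P <- (I - K H) P, where (K H P)[i][j] = K[i] * P[1][j]
        self.P = [[self.P[i][j] - K[i] * self.P[1][j] for j in range(3)] for i in range(3)]


def simulate(seed=0, true_bias=0.2, duration=10.0, dt=0.01):
    """100 Hz IMU, 10 Hz vision, with a vision dropout between t = 5 s and 7 s."""
    rng = random.Random(seed)
    kf = BiasKalman1D()
    p_true, v_true = 0.0, 0.0
    for k in range(1, int(round(duration / dt)) + 1):
        t = k * dt
        a_true = 0.5 * math.sin(t)
        p_true += v_true * dt + 0.5 * a_true * dt * dt
        v_true += a_true * dt
        # Accelerometer reading corrupted by a constant bias and white noise
        kf.propagate(a_true + true_bias + rng.gauss(0.0, 0.05), dt)
        vision_ok = not (5.0 <= t < 7.0)  # e.g. too few features to track
        if k % 10 == 0 and vision_ok:
            kf.update_velocity(v_true + rng.gauss(0.0, 0.05))
    return kf, p_true, v_true


kf, p_true, v_true = simulate()
print("estimated bias:", round(kf.x[2], 3))
```

During the simulated dropout the filter runs on the accelerometer alone, so any residual bias error is integrated without correction until vision measurements resume. The bias state `x[2]` is observable only because an uncompensated bias appears as a steady drift between the integrated and the visually measured velocity.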

- Relevant Publications

- C1. S.I. Roumeliotis, A.E. Johnson, and J.F. Montgomery, "Augmenting Inertial Navigation with Image-Based Motion Estimation." In Proc. 2002 IEEE International Conference on Robotics and Automation, Washington, D.C., May 11-15, pp. 4326-4333.