Visual-Inertial Navigation System (VINS) using a Rolling-Shutter Camera

- Chao Guo, Dimitrios Kottas, Ryan DuToit, Ahmed Ahmed, Ruipeng Li, and Stergios Roumeliotis (PI)


In order to develop Vision-aided Inertial Navigation Systems (VINS) on mobile devices, such as cell phones and tablets, one needs to consider two important issues, both due to the commercial-grade underlying hardware:

- The rolling-shutter effect caused by CMOS sensors

- The unknown and varying time offset between the camera and IMU clocks

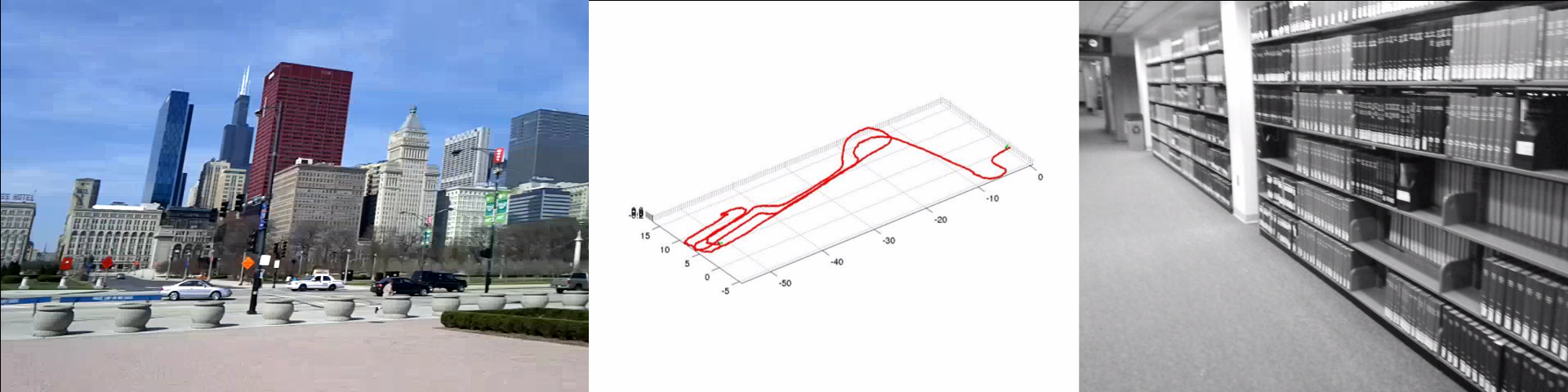

A comparison between global-shutter and rolling-shutter camera images

(from https://graphics.stanford.edu/papers/stabilization/karpenko_gyro.pdf)

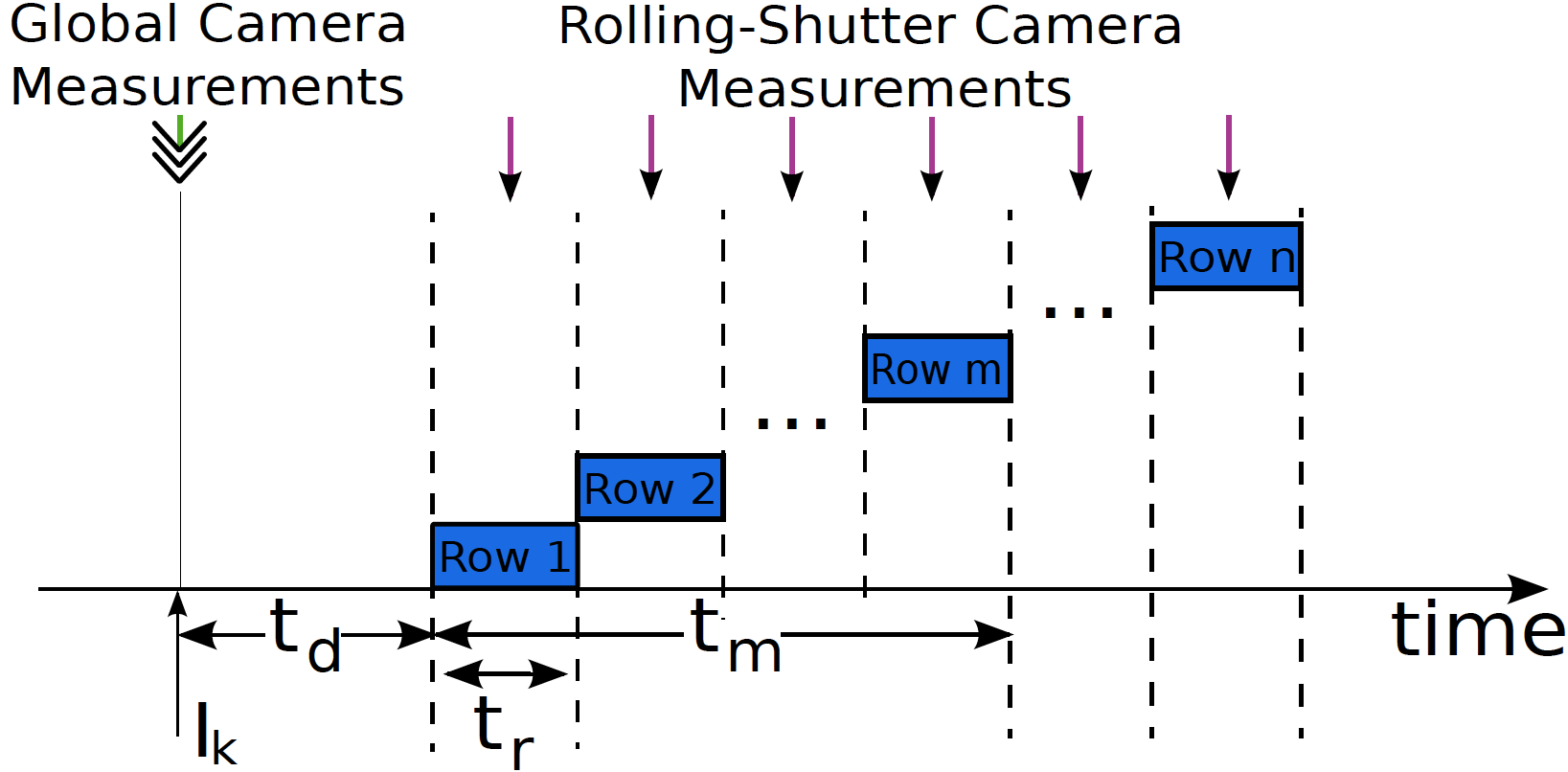

The rolling-shutter effect and imperfect time synchronization cause a time-varying offset between the arrival times of the IMU and camera measurements
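To make the timing picture concrete, the sketch below computes when a single image row was captured, expressed in the IMU clock. It assumes a simple timing model that is not spelled out on this page: the rows are read out one after another at a uniform rate, and the camera clock differs from the IMU clock by a single offset. The function name and the parameters `t_offset` and `t_readout` are hypothetical and chosen for illustration only.

```python
def row_capture_time(t_image, t_offset, t_readout, row, n_rows):
    """Approximate capture time of one image row, in the IMU clock.

    Assumed model (illustrative, not taken from the source):
      t_image   -- timestamp attached to the image by the camera (seconds)
      t_offset  -- camera-to-IMU clock offset (seconds)
      t_readout -- time needed to read out all rows of one frame (seconds)
      row       -- zero-based row index of the visual measurement
      n_rows    -- total number of rows in the image
    """
    if n_rows <= 0:
        raise ValueError("n_rows must be positive")
    if not 0 <= row < n_rows:
        raise ValueError("row index out of range")
    # Rows are assumed to be read sequentially at a constant rate, so the
    # delay of a row grows linearly with its index.
    return t_image + t_offset + t_readout * row / n_rows


if __name__ == "__main__":
    # Compare the first row and the middle row of a 480-row frame.
    first = row_capture_time(10.0, 0.005, 0.030, 0, 480)
    middle = row_capture_time(10.0, 0.005, 0.030, 240, 480)
    print(first, middle)
```

Under this model, two features in the same image that lie on different rows correspond to different camera poses, which is why a single pose per image is not sufficient for a rolling-shutter camera.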


In this work, we present a high-precision VINS that explicitly considers and accounts for both the rolling-shutter and time synchronization issues of an IMU-camera system. In particular, our main contributions are:

- We introduce an interpolation model that expresses the camera pose of each visual measurement as a function of neighboring IMU poses, which yields a significant speedup over alternative constant-velocity-based models

- We analytically determine the system’s unobservable directions when applying our interpolation measurement model, and use them to improve the VINS consistency and accuracy by employing the observability-constrained extended Kalman filter (OC-EKF)
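The idea of expressing a pose at a measurement time as a function of two neighboring IMU poses can be sketched as follows. This is a minimal illustration, not the exact model from the paper: it assumes linear interpolation for position and spherical linear interpolation (slerp) for orientation, with quaternions stored as `(w, x, y, z)`. The function names are hypothetical, and the fixed IMU-to-camera transform needed to obtain the camera pose from the interpolated IMU pose is omitted.

```python
import math


def slerp(q0, q1, lam):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q encode the same rotation; flip to follow the shorter arc.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: linear blend avoids dividing by ~0.
        q = tuple(a + lam * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - lam) * theta) / math.sin(theta)
        s1 = math.sin(lam * theta) / math.sin(theta)
        q = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)


def interpolate_pose(t, t0, pose0, t1, pose1):
    """Interpolate a (position, quaternion) pose at time t, with t0 <= t <= t1."""
    if t1 <= t0:
        raise ValueError("t1 must be later than t0")
    if not t0 <= t <= t1:
        raise ValueError("t must lie between the two neighboring poses")
    lam = (t - t0) / (t1 - t0)  # interpolation ratio in [0, 1]
    (p0, q0), (p1, q1) = pose0, pose1
    position = tuple(a + lam * (b - a) for a, b in zip(p0, p1))
    return position, slerp(q0, q1, lam)


if __name__ == "__main__":
    # Two IMU poses 0.1 s apart: 1 m translation along x, 90 degree yaw.
    pose_a = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0))
    pose_b = ((1.0, 0.0, 0.0),
              (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4)))
    print(interpolate_pose(0.05, 0.0, pose_a, 0.1, pose_b))
```

A scheme of this kind needs only the two neighboring poses and one scalar ratio per visual measurement, so no additional pose has to be estimated for each image row.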

- C1. C.X. Guo, D.G. Kottas, R.C. DuToit, A. Ahmed, R. Li, and S.I. Roumeliotis, "Efficient Visual-Inertial Navigation using a Rolling-Shutter Camera with Inaccurate Timestamps," Robotics: Science and Systems Conference (RSS), Berkeley, CA, Jul. 12-16, 2014.