Real-time Vision-aided Inertial Navigation


In this work we address the problem of pose estimation using measurements from an IMU and observations of visual features from a single camera.

Recent advances in the manufacturing of inertial sensors have made it possible to build small, inexpensive, and very accurate Inertial Measurement Units (IMUs), suitable for pose tracking in small-scale vehicles, such as mobile robots and UAVs. These systems often operate in urban environments where GPS signals are unreliable (the “urban canyon”), as well as indoors, in space, and in several other environments where global position measurements are unavailable. In such cases, cameras are ideal alternatives for aiding inertial navigation, due to their low cost, weight, and power consumption.

The main characteristic of vision sensing is that images are high-dimensional measurements. Feature extraction methods can typically detect and track hundreds of features per image, which, if properly used, can yield excellent localization results. However, this high volume of data also poses a significant challenge for estimation algorithm design. When real-time localization is required, one faces a fundamental trade-off between the computational complexity of an algorithm and the resulting estimation accuracy.


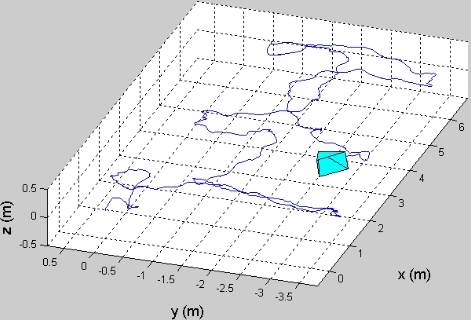

The main contribution of this work is an algorithm that optimally utilizes the localization information contained in the feature measurements, while having computational complexity only linear in the number of features. Our approach is motivated by the observation that, when a static feature is viewed from several camera poses, it is possible to define geometric constraints involving all of these poses. The key to achieving real-time performance is a novel measurement model that expresses these constraints without including the 3D feature position in the filter state vector. The resulting EKF-based estimator is capable of high-precision, real-time pose estimation, and can thus be employed in demanding applications such as UAV localization, vision-aided navigation for precise spacecraft landing, and pose tracking of mobile robots.
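The idea of a constraint that no longer depends on the 3D feature position can be illustrated with a left-nullspace projection, a standard technique for eliminating a nuisance variable from a linearized measurement. The sketch below is a minimal NumPy illustration under stated assumptions, not the authors' implementation: it assumes that a feature's observations from M camera poses have already been linearized and stacked into a residual r ≈ H_x δx + H_f δp_f + n, where δx is the error in the filter state (the camera poses), δp_f is the 3D feature-position error, and n is white noise with covariance σ²I. The function name `project_out_feature`, the tolerance, and all dimensions are hypothetical. Multiplying by a matrix A whose orthonormal columns span the left nullspace of H_f removes δp_f, leaving a residual that involves only the camera poses.

```python
import numpy as np


def project_out_feature(r, H_x, H_f, tol=1e-10):
    """Remove the feature-position error from a stacked, linearized residual.

    Assumed model (illustrative): r ~= H_x @ dx + H_f @ dp_f + n, where H_f has
    shape (2M, 3) for a feature seen from M camera poses. Returns (r_o, H_o)
    such that r_o ~= H_o @ dx + A.T @ n, with no dependence on dp_f.
    """
    # Full SVD of the feature Jacobian: the left singular vectors beyond
    # the numerical rank of H_f span its left nullspace.
    U, s, _ = np.linalg.svd(H_f, full_matrices=True)
    rank = int(np.sum(s > tol * s.max()))
    A = U[:, rank:]  # orthonormal columns with A.T @ H_f ~= 0
    return A.T @ r, A.T @ H_x


# Hypothetical example: one feature seen from 4 poses, 24-dim pose error state.
rng = np.random.default_rng(0)
M = 4
H_x = rng.standard_normal((2 * M, 24))
H_f = rng.standard_normal((2 * M, 3))
dx = rng.standard_normal(24)
r = H_x @ dx + H_f @ rng.standard_normal(3)  # noise-free for the demo

r_o, H_o = project_out_feature(r, H_x, H_f)
print(r_o.shape, H_o.shape)        # (5,) (5, 24)
print(np.allclose(r_o, H_o @ dx))  # True: the feature error has been removed
```

Because A has orthonormal columns, the projected noise Aᵀn keeps covariance σ²I, so r_o and H_o can be used in a standard EKF update. In this sketch each feature is processed on its own, which fits the per-feature cost structure described above; how the projected residuals are combined in the filter update is not shown.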


The performance of this algorithm has been demonstrated both in real-world experiments and in extensive simulation tests. Here we describe the results of: (i) a large-scale experiment in an uncontrolled urban environment, and (ii) an experiment in a typical office environment.

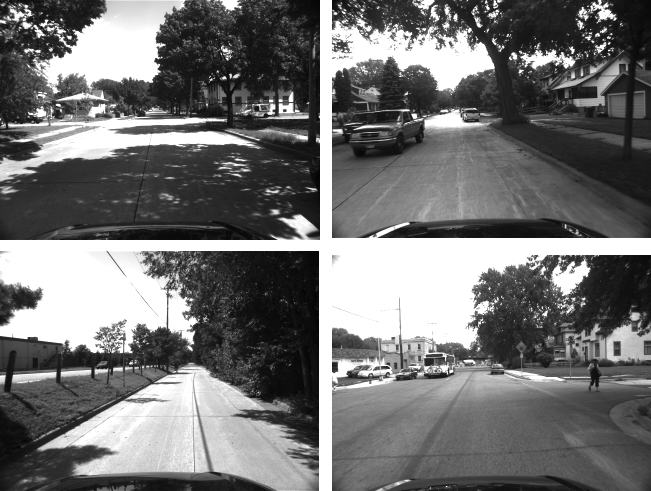

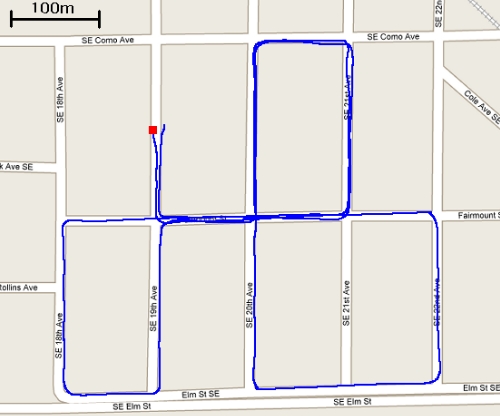

For the outdoor experiment an IMU/camera sensor payload was mounted on top of a car moving in a residential area of Minneapolis. The car traveled a total distance of approximately 3.2 km, over a time period of 9 min. The camera used for this experiment is an IEEE 1394 camera, recording images of resolution 640 x 480 pixels at a rate of 3 Hz, while the IMU is an Inertial Science ISIS IMU, recording inertial measurements at 100 Hz.
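As a quick sanity check on the sensor rates above, the stated (approximate) figures imply an average speed of about 5.9 m/s (roughly 21 km/h), around 33 IMU samples between consecutive images, and roughly 2 m of travel between camera frames. The snippet below only performs this arithmetic on the numbers given in the text; the variable names are illustrative.

```python
# Approximate figures from the outdoor experiment description.
distance_m = 3200.0  # ~3.2 km traveled
duration_s = 9 * 60  # ~9 min
camera_hz = 3.0      # image rate
imu_hz = 100.0       # inertial measurement rate

avg_speed = distance_m / duration_s        # average speed in m/s
num_images = camera_hz * duration_s        # total images recorded
num_imu = imu_hz * duration_s              # total IMU samples recorded
imu_per_frame = imu_hz / camera_hz         # IMU samples between images
meters_per_frame = avg_speed / camera_hz   # travel between images at avg speed

print(round(avg_speed, 2))         # 5.93
print(num_images, num_imu)         # 1620.0 54000.0
print(round(imu_per_frame, 2))     # 33.33
print(round(meters_per_frame, 2))  # 1.98
```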

Some example images from the recorded dataset are displayed below:

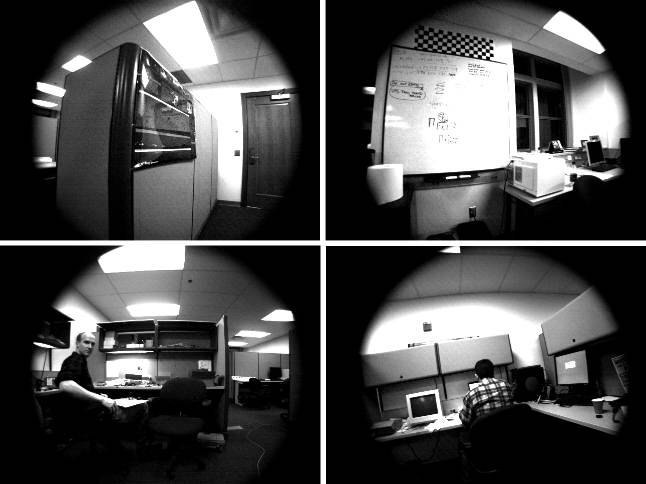

In addition to the outdoor experiment above, we have also tested the algorithm's performance in an indoor experiment. In this case, the IMU/camera system moves in a typical office setting (the main MARS lab office). Some example images from the sequence are the following:


- C1. A.I. Mourikis, S.I. Roumeliotis: "A Multi-state Constraint Kalman Filter for Vision-Aided Inertial Navigation," in Proceedings of the IEEE International Conference on Robotics and Automation, Rome, Italy, April 10-14, 2007, pp. 3565-3572.


This work was supported by the University of Minnesota (DTC), the NASA Mars Technology Program (MTP-1263201), and the National Science Foundation (EIA-0324864, IIS-0643680).