Navigation, Localization, and Mapping Algorithms Overview

The MARS lab has introduced several state-of-the-art visual-inertial localization and mapping algorithms. A summary of these approaches is presented below.

| Algorithm | Positioning accuracy | Number of features mapped | Real-time on mobile devices | Computational requirements | Prior map requirements |
|---|---|---|---|---|---|
| Sliding-window VINS filters* | Low | Low | Yes | Low | No |
| C-KLAM | Med | Med | Yes | Med | No |
| IBLS | Med | Med | Yes | Med/High | No |
| BLS | High | High | No | Med/High | No |
| CM | High | High | No | High | No |
| Map-Based Localization | High | N/A | Yes | Low | Yes |

Sliding-window VINS on Google Glass

- Objective: 3D position and orientation (pose) tracking within an unknown area

- Processing: Online on a device with severely limited CPU and memory (e.g., Google Glass, Bebop quadrotor)

- Optimization window: User poses in the past 2-3 sec (adjustable)

- Data: Visual-inertial measurements collected during the optimization window

- Image processing: Non-distinguishable features (e.g., FAST, Harris) tracked across sequences of images with KLT, or distinguishable features (e.g., FREAKs, ORBs) tracked based on descriptor matching
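
The time-based optimization window described above can be sketched as a buffer that keeps only the poses from the last few seconds. This is a minimal illustration, not the lab's implementation: the class name `SlidingWindow`, the 2.5 s default, and the opaque `pose` payload are all assumptions made for the example.

```python
from collections import deque


class SlidingWindow:
    """Keeps the poses observed during the last `duration_s` seconds (hypothetical helper)."""

    def __init__(self, duration_s=2.5):
        self.duration_s = duration_s  # adjustable window length, in seconds
        self.poses = deque()          # (timestamp, pose) pairs, oldest first

    def add(self, timestamp, pose):
        """Append a new pose and return the poses that fell out of the window."""
        self.poses.append((timestamp, pose))
        dropped = []
        # Evict poses older than the window, measured from the newest timestamp.
        while timestamp - self.poses[0][0] > self.duration_s:
            dropped.append(self.poses.popleft())
        return dropped


window = SlidingWindow(duration_s=2.5)
for step in range(50):                 # 5 s of poses at 10 Hz
    window.add(step / 10.0, pose=None)  # pose payload omitted in this sketch
```

A real estimator would process the measurements attached to each dropped pose before removing it from the state, rather than simply discarding it.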

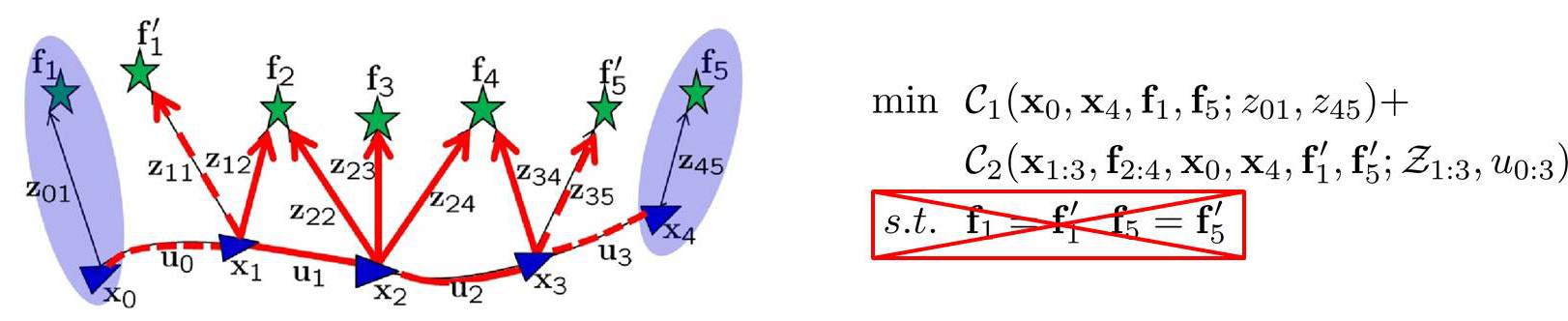

C-KLAM: Algorithm illustration

- Objective: Highly efficient and consistent approximation of batch least squares (BLS) for large-scale 3D localization and mapping

- Processing: Online on a modern cellphone/tablet

- Optimization window: Adjustable set of keyframes and key features

- Data: (i) Visual-inertial measurements involving keyframes and key features, and (ii) Pose constraints from marginalizing non-keyframes and non-key features

- Image processing: (i) Non-distinguishable features (e.g., FAST, Harris) tracked across sequences of images with KLT, and (ii) Distinguishable features (e.g., FREAKs, ORBs) matched between pairs of images using a vocabulary tree (VT)
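
The idea of turning marginalized states into pose constraints can be illustrated with the standard Schur complement on a small information matrix. The sketch below is generic: it uses scalar 1D "poses", and the function name `marginalize` is hypothetical. The specific approximation that C-KLAM applies when marginalizing non-keyframes and non-key features is not reproduced here.

```python
def marginalize(H, b, m):
    """Remove variable m from the information form (H, b) via the Schur complement.

    H is a symmetric information matrix (list of lists) and b the information
    vector. Variable m must be a scalar with H[m][m] != 0.
    """
    keep = [k for k in range(len(b)) if k != m]
    Hmm = H[m][m]
    H_new = [[H[i][j] - H[i][m] * H[m][j] / Hmm for j in keep] for i in keep]
    b_new = [b[i] - H[i][m] * b[m] / Hmm for i in keep]
    return H_new, b_new


# Chain x0 -> x1 -> x2 with unit-weight odometry x1 - x0 = 1 and x2 - x1 = 2.
# x0 and x2 play the role of keyframes; x1 is the non-keyframe to marginalize.
H = [[1.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0],
     [0.0, -1.0, 1.0]]
b = [-1.0, -1.0, 2.0]

H_kf, b_kf = marginalize(H, b, m=1)
```

The result involves only the two keyframes and is equivalent to a single relative constraint `x2 - x0 = 3` with weight 0.5, i.e. the two odometry steps chained together with their variances added.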

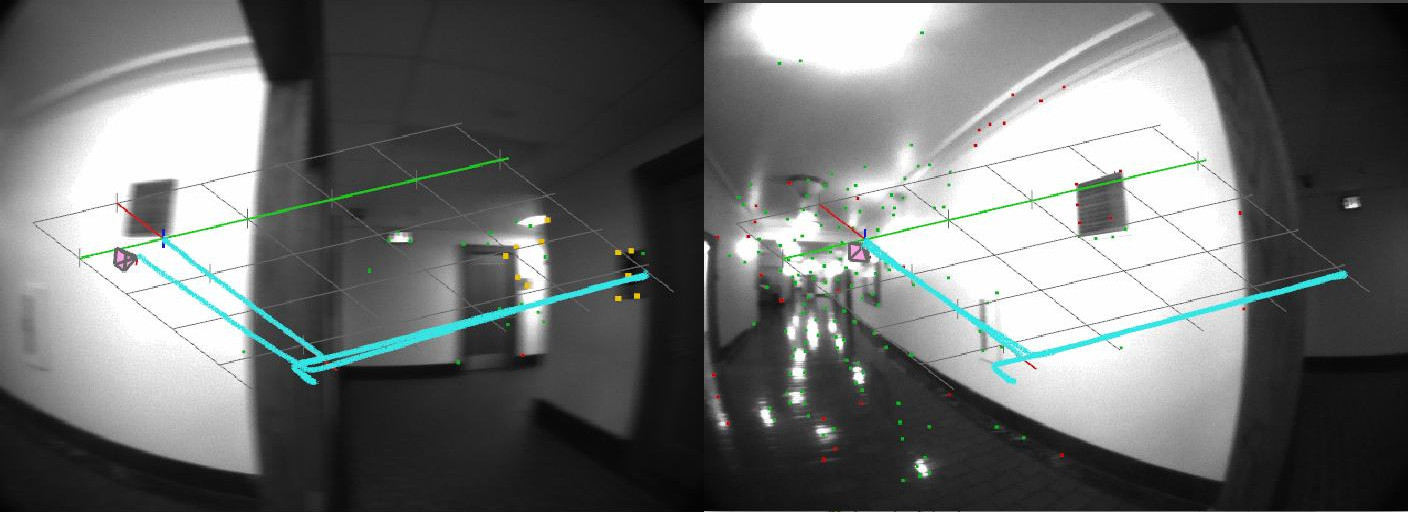

IBLS: (Left) Sliding-window VINS filter 3D pose estimated in real-time; (Right) Refined trajectory and map estimates computed in parallel and available intermittently

- Objective: High-speed 3D pose tracking with improved accuracy, plus online incremental mapping

- Processing: Online on a modern cellphone/tablet

- Optimization window (VINS component): User poses in the past 2-3 sec (adjustable)

- Optimization window (BLS component): All user poses and features observed

- Data (BLS component): All visual-inertial measurements

- Image processing: (i) Non-distinguishable features (e.g., FAST, Harris) tracked across sequences of images with KLT, and (ii) Distinguishable features (e.g., FREAKs, ORBs) matched between pairs of images using a vocabulary tree (VT)

BLS-based mapping on a tablet

- Objective: Create a map of a large area (up to ~1 km of trajectory) using a modern tablet

- Processing: Offline on a modern tablet

- Optimization window: The user's trajectory and features observed

- Data: The visual-inertial measurements collected by the tablet

- Image processing: (i) Non-distinguishable features (e.g., FAST, Harris) tracked across sequences of images with KLT, and (ii) Distinguishable features (e.g., FREAKs, ORBs) matched between pairs of images using a vocabulary tree (VT)
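
To make "batch least squares over the whole trajectory" concrete, here is a minimal sketch on a 1D toy problem: every pose is estimated jointly from all relative measurements, including a loop closure. The function names and the measurement tuple layout are assumptions for this example. A real visual-inertial BLS is nonlinear and would iterate (e.g., Gauss-Newton) over 3D poses and features, whereas this linear toy problem is solved in one step.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def batch_least_squares(num_poses, measurements):
    """Estimate 1D poses x_0..x_{n-1} from relative measurements.

    measurements: list of (i, j, z, sigma), meaning x_j - x_i = z with
    standard deviation sigma. x_0 is fixed at 0 to remove the gauge freedom.
    """
    n = num_poses - 1                      # unknowns are x_1..x_{n}
    H = [[0.0] * n for _ in range(n)]      # normal-equation matrix
    g = [0.0] * n                          # right-hand side
    for i, j, z, sigma in measurements:
        w = 1.0 / sigma ** 2
        # Jacobian entries: -1 for x_i, +1 for x_j; the fixed x_0 is skipped.
        terms = [(k, c) for k, c in ((i - 1, -1.0), (j - 1, 1.0)) if k >= 0]
        for k, ck in terms:
            g[k] += w * ck * z
            for l, cl in terms:
                H[k][l] += w * ck * cl
    return [0.0] + solve_linear(H, g)


odometry = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0)]
loop_closure = [(0, 3, 2.7, 1.0)]          # disagrees slightly with odometry
trajectory = batch_least_squares(4, odometry + loop_closure)
```

Because all measurements are used at once, the disagreement between the odometry chain and the loop closure is spread over the whole trajectory instead of being absorbed by the last pose alone.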

CM: A high-accuracy 3D map of a building using data collected with multiple mobile devices (Manhattan lines shown here)

- Objective: Create a map of a large-scale area

- Processing: Offline on a high-end tablet/laptop

- Optimization window: All users' trajectories and features observed

- Data: Visual-inertial measurements collected from multiple devices

- Image processing: (i) Non-distinguishable features (e.g., FAST, Harris) tracked across sequences of images with KLT, (ii) Distinguishable features (e.g., FREAKs, ORBs) matched between pairs of images using a vocabulary tree (VT), and (iii) Line segments extracted and tracked across images

Map-based localization uses a previously built map of the area to localize the user in real time on a blueprint

- Objective: High-accuracy 3D pose tracking within a mapped area

- Processing: Online on a mobile device

- Optimization window: User poses in the past 2-3 sec (adjustable)

- Data: Visual-inertial measurements collected during the optimization window

- Image processing: (i) Non-distinguishable features (e.g., FAST, Harris) tracked across sequences of images with KLT, and (ii) Distinguishable features (e.g., FREAKs, ORBs) matched between the current and mapped images using a vocabulary tree (VT)
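
ORB and FREAK produce binary descriptors, which are commonly compared by Hamming distance. The sketch below shows brute-force matching between the descriptors of the current image and those of one mapped image, with a distance threshold and a ratio test. It stands in for the step that follows the vocabulary-tree lookup and does not implement the tree itself. The function names, the thresholds, and the use of Python integers as descriptors are assumptions for illustration.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query, mapped, max_distance=40, ratio=0.8):
    """Return (query_index, mapped_index, distance) for unambiguous matches."""
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, m), mi) for mi, m in enumerate(mapped))
        if not ranked:
            continue
        best_d, best_i = ranked[0]
        if best_d > max_distance:
            continue  # nearest neighbor is still too far away
        if len(ranked) > 1 and best_d >= ratio * ranked[1][0]:
            continue  # ratio test: best match is not clearly better than the runner-up
        matches.append((qi, best_i, best_d))
    return matches


# Toy 8-bit descriptors; real ORB descriptors are 256 bits long.
mapped_descriptors = [0b11110000, 0b00001111, 0b10101010]
current_descriptors = [0b11110001, 0b00001111, 0b11111111]
matches = match_descriptors(current_descriptors, mapped_descriptors)
```

The third query descriptor is equally far from every mapped descriptor, so the ratio test rejects it; only the first two produce matches.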